The Linux operating system calls a running program a process. A process can run in the foreground, displaying output on a display, or it can run in the background, behind the scenes. The kernel controls how the Linux system manages all the processes running on the system.

The kernel creates the first process, called the init process, to start all other processes on the system. When the kernel starts, it loads the init process into virtual memory. As the kernel starts each additional process, it allocates to it a unique area in virtual memory to store the data and code that the process uses.

Most Linux implementations contain a table (or tables) of processes that start automatically on boot-up. This table is often located in the special file /etc/inittab. However, the Ubuntu Linux system uses a slightly different format, storing multiple table files in the /etc/event.d folder by default.

The Linux operating system uses an init system that utilizes run levels. A run level directs the init process to run only certain types of processes, as defined in the /etc/inittab file or the files in the /etc/event.d folder. There are seven init run levels in the Linux operating system. Level 0 is for when the system is halted, and level 6 is for when the system is rebooting. Levels 1 through 5 manage the Linux system while it’s operating.

At run level 1, only the basic system processes are started, along with one console terminal process. This is called Single User mode. Single User mode is most often used for emergency filesystem maintenance when something is broken. In this mode only one person (usually the administrator) can log into the system to manipulate data. On many distributions the standard init run level is 3 (Debian-based systems such as Ubuntu default to run level 2). At this run level most application software, such as network support software, is started. Another popular run level in Linux is 5. This is the run level where the system starts the graphical X Window software and allows you to log in using a graphical desktop window.
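The run levels described so far can be summarized in a small lookup table. This is only an illustrative sketch in Python: the names RUN_LEVELS and describe_run_level are made up for this example, and the descriptions simply restate the text. Levels 2 and 4 are not described here, so the sketch gives them a generic label.

```python
# Illustrative lookup table of the run levels described in the text.
# RUN_LEVELS and describe_run_level are hypothetical names for this sketch.
RUN_LEVELS = {
    0: "halt",
    1: "single user mode (basic system processes, one console)",
    3: "multi-user console mode with network support",
    5: "multi-user mode with the graphical X Window login",
    6: "reboot",
}


def describe_run_level(level):
    """Return a short description for an init run level (0 through 6)."""
    if level not in range(7):
        raise ValueError("run levels range from 0 to 6")
    # Levels 2 and 4 are not described in the text, so they get a generic label.
    return RUN_LEVELS.get(level, "operating level (distribution-specific)")


if __name__ == "__main__":
    for level in range(7):
        print(level, describe_run_level(level))
```

Keeping the table as plain data makes it easy to adjust for a distribution that assigns the middle levels differently.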

The Linux system can control the overall system functionality by controlling the init run level. By changing the run level from 3 to 5, the system can change from a console-based system to an advanced, graphical X Window system. Here are a few lines extracted from the output of the ps command:

test@testbox:~$ ps ax
  PID TTY      STAT   TIME COMMAND
    1 ?        Ss     0:01 /sbin/init
    2 ?        S<     0:00 [kthreadd]
    3 ?        S<     0:00 [migration/0]
    4 ?        S<     0:00 [ksoftirqd/0]
    5 ?        S<     0:00 [watchdog/0]
 4708 ?        S<     0:00 [krfcommd]
 4759 ?        Ss     0:00 /usr/sbin/gdm
 4761 ?        S      0:00 /usr/sbin/gdm
 4814 ?        Ss     0:00 /usr/sbin/atd
 4832 ?        Ss     0:00 /usr/sbin/cron
 4920 tty1     Ss+    0:00 /sbin/getty 38400 tty1
 5417 ?        Sl     0:01 gnome-settings-daemon
 5425 ?        S      0:00 /usr/bin/pulseaudio --log-target=syslog
 5426 ?        S      0:00 /usr/lib/pulseaudio/pulse/gconf-helper
 5437 ?        S      0:00 /usr/lib/gvfs/gvfsd
 5451 ?        S      0:05 gnome-panel --sm-client-id default1
 5632 ?        Sl     0:34 gnome-system-monitor
 5638 ?        S      0:00 /usr/lib/gnome-vfs-2.0/gnome-vfs-daemon
 5642 ?        S      0:09 gimp-2.4
 6319 ?        Sl     0:01 gnome-terminal
 6321 ?        S      0:00 gnome-pty-helper
 6322 pts/0    Rs     0:00 bash
 6343 ?        S      0:01 gedit
 6385 pts/0    R+     0:00 ps ax
test@testbox:~$

The first column in the output shows the process ID (or PID) of the process. Notice that the first process is our friend, the init process, which is assigned PID 1 by the Ubuntu system. All other processes that start after the init process are assigned PIDs in numerical order. No two processes can have the same PID.

The third column shows the current status of the process. The first letter represents the state the process is in (S for sleeping, R for running). The process name is shown in the last column. Names shown in brackets are processes for which ps cannot read a command line. These are typically kernel threads, such as kthreadd and ksoftirqd/0 in this listing, rather than ordinary programs.
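To make the STAT column easier to read, here is a minimal Python sketch that splits one line of ps ax output into its columns and decodes the status code. The function names are invented for this example. The letter meanings follow the process state codes listed in the ps manual page, and only the most common ones are included.

```python
# Decode the STAT column of `ps ax` output (a sketch, not a full parser).
# decode_stat and parse_ps_line are hypothetical helper names.
STATE_LETTERS = {
    "R": "running or runnable",
    "S": "sleeping (interruptible)",
    "D": "uninterruptible sleep",
    "T": "stopped",
    "Z": "zombie",
}
FLAG_CHARS = {
    "<": "high priority",
    "N": "low priority",
    "s": "session leader",
    "l": "multi-threaded",
    "+": "foreground process group",
}


def decode_stat(stat):
    """Return (state, flags) for a STAT value such as 'Ss+'."""
    state = STATE_LETTERS.get(stat[0], "unknown state")
    flags = [FLAG_CHARS[c] for c in stat[1:] if c in FLAG_CHARS]
    return state, flags


def parse_ps_line(line):
    """Split one data line of `ps ax` output into a dictionary."""
    # The command may contain spaces, so split at most four times.
    pid, tty, stat, cpu_time, command = line.split(None, 4)
    state, flags = decode_stat(stat)
    return {
        "pid": int(pid),
        "tty": tty,
        "state": state,
        "flags": flags,
        "time": cpu_time,
        "command": command.strip(),
        # ps prints a bracketed name when no command line is available,
        # which is typical of kernel threads.
        "bracketed": command.strip().startswith("["),
    }


if __name__ == "__main__":
    print(parse_ps_line("4920 tty1 Ss+ 0:00 /sbin/getty 38400 tty1"))
```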

Source of information : Wiley Ubuntu Linux Secrets

Monday, August 31, 2009

Sunday, August 30, 2009

The Linux Kernel - System Memory Management

One of the primary functions of the operating system kernel is memory management. Not only does the kernel manage the physical memory available on the server, it can also create and manage virtual memory, or memory that does not actually exist. It does this by using space on the hard disk, called the swap space. The kernel swaps the contents of virtual memory locations back and forth from the swap space to the actual physical memory. This process allows the system to think there is more memory available than what physically exists.

The memory locations are grouped into blocks called pages. The kernel locates each page of memory in either the physical memory or the swap space. It then maintains a table of the memory pages that indicates which pages are in physical memory and which pages are swapped out to disk.

The kernel keeps track of which memory pages are in use and automatically copies memory pages that have not been accessed for a period of time to the swap space area (called swapping out). When a program wants to access a memory page that has been swapped out, the kernel must make room for it in physical memory by swapping out a different memory page and then swapping in the required page from the swap space. This takes time, and it can slow down a running process. The process of swapping out memory pages for running applications continues for as long as the Linux system is running. You can see the current status of the memory on an Ubuntu system by using the System Monitor utility.
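The swapping behavior described above can be imitated with a toy model. The Python sketch below is a deliberate simplification and not how the Linux kernel is implemented: it assumes a fixed number of physical page frames and always swaps out the least recently used page. The class name ToyPager and its attributes are invented for this illustration.

```python
from collections import OrderedDict


class ToyPager:
    """Toy model of paging: a few physical frames, everything else in swap."""

    def __init__(self, physical_frames):
        self.physical_frames = physical_frames
        self.in_memory = OrderedDict()  # page name -> None, oldest access first
        self.in_swap = set()            # pages currently swapped out
        self.swap_ins = 0               # how often a page came back from swap

    def access(self, page):
        """Touch a page, swapping pages in and out as needed."""
        if page in self.in_memory:
            # Already resident: just mark it as the most recently used page.
            self.in_memory.move_to_end(page)
            return
        if page in self.in_swap:
            self.in_swap.remove(page)
            self.swap_ins += 1
        if len(self.in_memory) >= self.physical_frames:
            # Make room by swapping out the least recently used page.
            victim, _ = self.in_memory.popitem(last=False)
            self.in_swap.add(victim)
        self.in_memory[page] = None


if __name__ == "__main__":
    pager = ToyPager(physical_frames=2)
    for page in ["A", "B", "C", "A"]:
        pager.access(page)
    print("in memory:", list(pager.in_memory))
    print("in swap:", sorted(pager.in_swap))
    print("swap-ins:", pager.swap_ins)
```

With two frames, touching a third page forces one of the first two out, and touching that page again costs a swap-in. That is the slowdown the text mentions.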

The Memory graph shows that this Linux system has 380.5 MB of physical memory. It also shows that about 148.3 MB is currently being used. The next line shows that there is about 235.3 MB of swap space memory available on this system, with none in use at the time.

By default, each process running on the Linux system has its own private memory pages. One process cannot access memory pages being used by another process. The kernel maintains its own memory areas. For security purposes, no processes can access memory used by the kernel processes. Each individual user on the system also has a private memory area used for handling any applications the user starts.

Often, however, related applications run that must communicate with each other. One way to do this is through data sharing. To facilitate data sharing, you can create shared memory pages.
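The same memory and swap totals are also available as text in the /proc/meminfo file on Linux. The sketch below parses that format in Python. The function names are invented for this example, and the sample values are made up for illustration (the MemTotal figure is chosen to equal 380.5 MB) rather than taken from a real system.

```python
import os


def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of integer kB values."""
    values = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        fields = rest.split()
        if sep and fields and fields[0].isdigit():
            values[name.strip()] = int(fields[0])
    return values


def summarize(values):
    """Return physical and swap totals in MB, plus swap currently in use."""
    return {
        "physical_mb": values["MemTotal"] / 1024.0,
        "free_mb": values["MemFree"] / 1024.0,
        "swap_mb": values["SwapTotal"] / 1024.0,
        "swap_used_mb": (values["SwapTotal"] - values["SwapFree"]) / 1024.0,
    }


# Made-up sample in the /proc/meminfo format, for illustration only.
SAMPLE = """MemTotal:         389632 kB
MemFree:          102400 kB
SwapTotal:        240640 kB
SwapFree:         240640 kB
"""

if __name__ == "__main__":
    path = "/proc/meminfo"
    if os.path.exists(path):
        with open(path) as handle:
            text = handle.read()
    else:
        text = SAMPLE
    print(summarize(parse_meminfo(text)))
```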

A shared memory page allows multiple processes to read and write to the same shared memory area. The kernel maintains and administers the shared memory areas, controlling which processes are allowed access to the shared area. The special ipcs command allows us to view the current shared memory pages on the system. Here’s the output from a sample ipcs command:

test@testbox:~$ ipcs -m

------ Shared Memory Segments --------
key        shmid      owner      perms      bytes      nattch     status
0x00000000 557056     test       600        393216     2          dest
0x00000000 589825     test       600        393216     2          dest
0x00000000 622594     test       600        393216     2          dest
0x00000000 655363     test       600        393216     2          dest
0x00000000 688132     test       600        393216     2          dest
0x00000000 720901     test       600        196608     2          dest
0x00000000 753670     test       600        393216     2          dest
0x00000000 1212423    test       600        393216     2          dest
0x00000000 819208     test       600        196608     2          dest
0x00000000 851977     test       600        393216     2          dest
0x00000000 1179658    test       600        393216     2          dest
0x00000000 1245195    test       600        196608     2          dest
0x00000000 1277964    test       600        16384      2          dest
0x00000000 1441805    test       600        393216     2          dest

test@testbox:~$

Each shared memory segment has an owner that created the segment. Each segment also has a standard Linux permissions setting that sets the availability of the segment for other users. The key value is used to allow other users to gain access to the shared memory segment.
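The columns of the ipcs listing are easy to process with a short script. The Python sketch below parses rows in the format shown above and totals the segment sizes. The Segment tuple and the function names are invented for this example, and the three sample rows are copied from the listing.

```python
from collections import namedtuple

# One row of `ipcs -m` output; the field names mirror the column headers.
Segment = namedtuple("Segment", "key shmid owner perms bytes nattch status")


def parse_ipcs_rows(text):
    """Return a Segment for every data row in `ipcs -m` style output."""
    segments = []
    for line in text.splitlines():
        fields = line.split()
        # Data rows start with a hexadecimal key such as 0x00000000.
        if len(fields) >= 6 and fields[0].startswith("0x"):
            status = fields[6] if len(fields) > 6 else ""
            segments.append(Segment(fields[0], int(fields[1]), fields[2],
                                    fields[3], int(fields[4]),
                                    int(fields[5]), status))
    return segments


def owner_only(segment):
    """True if the permission bits grant nothing to group or others."""
    return int(segment.perms, 8) & 0o077 == 0


# Three rows copied from the listing above.
SAMPLE = """key shmid owner perms bytes nattch status
0x00000000 557056 test 600 393216 2 dest
0x00000000 720901 test 600 196608 2 dest
0x00000000 1277964 test 600 16384 2 dest
"""

if __name__ == "__main__":
    rows = parse_ipcs_rows(SAMPLE)
    print("segments:", len(rows))
    print("total bytes:", sum(row.bytes for row in rows))
    print("all owner-only:", all(owner_only(row) for row in rows))
```

A perms value of 600 means the owner can read and write the segment while the group and other users get no access, just as with ordinary file permissions.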

Source of Information : Apress Ubuntu On A Dime The Path To Low Cost Computing

Saturday, August 29, 2009

Pay-to-Use Software

With pay-to-use software, you visit your local computer store or a big name chain store, find a box containing the software you want to install on your computer, and your bank account balance is then lowered anywhere from $20.00 to $600.00. Another version of pay-to-use software is found when you visit a company’s web site, provide your credit card information, and then download the software immediately using your broadband connection. (Sometimes you have to wait for the company to actually send you the software on a disc—anxiously checking your mailbox daily for its arrival.) With the downloadable software, you get no CD/DVD disc, no printed manual, and no pretty box with color graphics on the front. Would you believe, however, that you’re probably charged the same amount of money as if you’d purchased the boxed version from a brick-and-mortar store? It’s happened to me! You’ll typically get no explanation for why you’re not getting a discount for saving them packing and shipping costs.

Finally, there’s another version of pay-to-use that’s even more devious: subscription software. You pay a one-time product initiation fee (typically large) to get the software and then you pay a weekly/monthly/yearly subscription fee (sometimes also referred to as a maintenance fee) to keep using the software.

Believe it or not, the current rumors buzzing around the technology world are that subscription software is the future of software: you won’t buy software anymore; you’ll “rent” it. Miss a payment and that software might very well uninstall itself from your computer, requiring a completely new purchase (with that large product initiation fee) before starting up with the subscription fees again.

Shocked? Angry? Confused? Yes to all three? Well, let me put your mind at ease and let you know that there are individuals, teams, and companies out there determined to fight subscription fees every step of the way. And they’re doing this by creating free applications that are competing with the big name applications (BNAs).

Source of Information : Apress Ubuntu On A Dime The Path To Low Cost Computing

Friday, August 28, 2009

Ubuntu Needs Hardware

First, the good news: every piece of software you’ll learn about in this book, including the Ubuntu operating system, is 100 percent free—free to download, free to install, and free to use. I might as well go ahead and say that the software is also free to uninstall, free to love or hate, free to complain about, and, of course, free to rave about to your friends and family.

And now the bad news: unless a major breakthrough in direct-to-brain downloading has occurred as you read this, that 100 percent free operating system and software will need a home. And that means a computer—a whirring, beeping, plugged-in personal computer (PC) that contains a few basic components that are absolutely required for you to download, store, and use the previously mentioned software. But there’s more good news. It is no longer mandatory that you spend a bundle of money to be able to install an operating system and all the software you know you’ll want to use. Let me explain.

In early 2007, Microsoft introduced its latest operating system, Vista, to the world. It then promptly informed everyone that running the operating system properly would require some hefty computer hardware requirements: more hard drive space than any previous operating system, more memory, and a much faster processor. And those requirements were the minimum just to run Vista; other limits existed. For example, if you wanted to have all the fancy new graphics features, you’d have to invest in a faster (and more expensive) video card. It wasn’t uncommon to find users spending $500 or more on hardware upgrades. And in many instances a completely new computer would need to be purchased if the user wanted to run Vista; older computers simply didn’t meet the requirements.

Have you had enough? Are you tired of spending dollar after dollar chasing the dream of the “perfect PC?” Are you looking for an inexpensive but scalable (upgradeable) computer that can provide you with basic services such as e-mail, word processing, and Internet browsing? And don’t forget other features, such as Internet messaging, VOIP (using your Internet connection to make phone calls), photo editing, and games. You shouldn’t have to skimp on any services or features. Does this sound like a computer you’d enjoy owning and using?

If so, today’s your lucky day. Because I’ll show you how easy it is to put together your own computer using inexpensive components. And because you won’t be spending any money on software, you’ll have the option to put some (or all) of those savings into your new computer. You might splurge and buy a bigger LCD panel (or a second LCD for multiple-monitor usage!) or add some more memory so you can run more applications at once. Or you can spend the bare minimum on hardware, keeping your expenditures low without skimping on software and services. (And if you want to get some more life out of your existing computer, I’ll explain how you can possibly give it a second life by installing Ubuntu to save even more money!)

Basic Components

You’ve probably heard the phrase, “Your mileage may vary,” and it’s never truer than when dealing with different computer hardware setups running Ubuntu (or any operating system). But when it comes to the Ubuntu operating system versus the Windows operating system, there is one large difference in hardware requirements: Ubuntu requires substantially less “oomph” when it comes to the basic components you need inside your computer. By this, I mean you don’t need the fastest processor, a huge amount of RAM, or even a large capacity hard drive.

The recommended Ubuntu hardware consists of the following:

• 700MHz x86 processor

• 384MB system memory (RAM)

• 8GB disk space

• Graphics card capable of 1024x768 resolution

• Sound card

• Network or Internet connection
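As a quick illustration, the numeric items in this list can be turned into a small check. The Python sketch below is only an example: the dictionary keys and the function name are invented here, and the sample machine’s processor speed is an assumed value.

```python
# Recommended minimums taken from the list above.
RECOMMENDED = {"cpu_mhz": 700, "ram_mb": 384, "disk_gb": 8}


def shortfalls(machine):
    """Return the names of any components below the recommended minimum."""
    return [name for name, minimum in RECOMMENDED.items()
            if machine.get(name, 0) < minimum]


if __name__ == "__main__":
    # An older PC; the 1500MHz processor speed is an assumed figure.
    old_pc = {"cpu_mhz": 1500, "ram_mb": 512, "disk_gb": 60}
    missing = shortfalls(old_pc)
    print("meets recommendations" if not missing else "below minimum: %s" % missing)
```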

Note that you don’t need the Intel 3GHz (gigahertz) Core 2 Duo processor, 2GB (gigabytes) of RAM, and 500GB hard drive that you would want for the Vista Ultimate operating system. You can install the latest version of Ubuntu on the cutting-edge hardware found in a computer from 2000. Back in 2000, a good computer might typically come with a 60GB hard drive, 512MB or 1GB of RAM, and a Pentium 4 processor, as well as a built-in network card, video, and sound on the motherboard. Surprised?

This means that it’s possible for you to install Ubuntu on your current computer (or an older one you’ve packed away and hidden in a closet somewhere). Ubuntu doesn’t put a lot of demand on hardware, so you can use your current computer or build your own, but avoid the latest bleeding-edge technology (that also comes with a bleeding-edge price).

Not convinced? Okay, here’s where I put my money where my mouth is and show you just how easy it is to build a computer that will run Ubuntu and hundreds more applications for very little money. What’s even better is that I’m 99.9 percent certain that in five years this computer will most likely run the latest version of Ubuntu. Can you say that about your current computer and, say, Windows 2014 Home Edition?

In the introduction of this book, I mentioned that I wanted to build a basic computer that would satisfy a number of requirements:

• It must cost me less than $250.00.

• It must allow me to access the Internet.

• It must also let me access my e-mail, either via the Internet or stored on my hard drive.

• It must provide me with basic productivity features: word processor, spreadsheet, and slideshow-creation software.

• It must allow me to play music and create my own CDs or DVDs.

This list helps define the hardware I need to purchase to build my U-PC.

Source of Information : Apress Ubuntu On A Dime The Path To Low Cost Computing

Thursday, August 27, 2009

Moonlight|3D—3-D Image Modeling

www.moonlight3d.eu

This last project looks really cool and impressed me, but I’m afraid documentation is nonexistent, so hopefully some of you folks at home can help these guys out. According to the Freshmeat page:

Moonlight|3D is a modeling and animation tool for three-dimensional art. It currently supports mesh-based modeling. It’s a redesign of Moonlight Atelier, formed after Moonlight Atelier/Creator died in 1999/2000. Rendering is done through pluggable back ends. It currently supports Sunflow, with support for RenderMan and others in planning.

The Web site sheds further light on the project, which states one of its goals as: “In order to speed up the progress of our development efforts, we open up the project to the general public, and we hope to attract the support of many developers and users, bringing the project forward faster.”

Installation. In terms of requirements, the only thing I needed to install to get Moonlight running was Java, so thankfully, the dependencies are fairly minimal. As for choices of packages at the Web site, there’s a nightly build available as a binary or the latest source code (I ran with the binary). Grab the latest, extract it to a local folder, and open a terminal in the new folder. Then, enter the command:

$ ./moonlight.sh

Provided you have everything installed, it now should start. Once you’re inside, I’m sorry, I really can’t be of much help. There are the usual windows in a 3-D editor for height, width, depth and a 3-D view, and on the left are quick selection panes for objects, such as boxes, cones, spheres and so on (actually, the pane on the left has access to just about everything you need—it’s pretty cool). Scouting about, a number of cool functions really jumped out at me, like multiple preview modes; changeable light, camera sources and positions; and most important, the ability to make your own animations. If only I could find a way to use them.

This project really does look pretty cool, and it seems to be a decent alternative to programs like Blender, but there honestly is no documentation. All links to documentation lead to a page that says the documentation doesn’t exist yet and provides a link to the on-line forums. The forums also happen to have very little that’s of use to someone without any prior knowledge of the interface, and I assume all those already on the forum are users of the original Moonlight Atelier. Nevertheless, the project does look interesting and seems to be quite stable. I look forward to seeing what happens with this project once some documentation is in place.

Source of Information : Linux Journal Issue 181 May 2009

Wednesday, August 26, 2009

gipfel—Mountain Viewer/Locater

www.ecademix.com/JohannesHofmann/gipfel.html

This is definitely one of the most original and niche projects I’ve come across— and those two qualities are almost bound to get projects included in this section! gipfel has a unique application for mountain images and plotting. According to the Web site:

gipfel helps to find the names of mountains or points of interest on a picture. It uses a database containing names and GPS data. With the given viewpoint (the point from which the picture was taken) and two known mountains on the picture, gipfel can compute all parameters needed to compute the positions of other mountains on the picture. gipfel can also generate (stitch) panorama images.
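To give a rough feel for the kind of geometry involved, the Python sketch below computes the compass bearing from a viewpoint to a peak from their GPS coordinates, using the standard initial-bearing formula on a sphere. This is not gipfel’s actual algorithm, which is not described here. The function names are invented for this illustration, and the sketch ignores elevation and camera parameters entirely.

```python
import math


def initial_bearing(lat1, lon1, lat2, lon2):
    """Compass bearing in degrees (0 = north, 90 = east) from point 1 to point 2."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return math.degrees(math.atan2(y, x)) % 360.0


def horizontal_angle(bearing_a, bearing_b):
    """Smallest angle in degrees between two compass bearings."""
    difference = abs(bearing_a - bearing_b) % 360.0
    return min(difference, 360.0 - difference)


if __name__ == "__main__":
    # Simple test coordinates rather than real peaks.
    to_north = initial_bearing(0.0, 0.0, 1.0, 0.0)
    to_east = initial_bearing(0.0, 0.0, 0.0, 1.0)
    print(to_north, to_east, horizontal_angle(to_north, to_east))
```

Once two known peaks fix the direction and scale of the picture, the bearing to any other peak in the database gives a rough idea of where it should appear horizontally.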

Installation. A source tarball is available on the Web site, and trawling around the Net, I found a package from the ancient wonderland of Debian. But, the package is just as old and beardy as its parent OS. Installing gipfel’s source is a pretty basic process, so I went with the tarball. Once the contents are extracted and you have a terminal open in the new directory, it needs only the usual:

$ ./configure

$ make

And, as sudo or root:

# make install

However, like most niche projects, it does have a number of slightly obscure requirements that probably aren’t installed on your system (the configure script will inform you). The Web site gives the following requirements:

• UNIX-like system (for example, Linux, *BSD)

• fltk-1.1

• gsl (GNU Scientific Library)

• libtiff

I found I needed to install fltk-1.1-dev and libgsl0-dev to get past ./configure (you probably need the -dev package for libtiff installed too, but I already had that installed from a previous project). Once compilation has finished and the install script has done its thing, you can start the program with:

$ gipfel

Usage. Once you’re inside, the first thing you’ll need to do is load a picture of mountains (and a word of warning, it only accepts .jpg files, so convert whatever you have if it isn’t already a .jpg). Once the image is loaded, you either can choose a viewpoint from a predefined set of locations, such as Everest Base Camp and so on, or enter the coordinates manually. However, I couldn’t wrap my head around the interface for manual entry, and as Johannes Hofmann says on his own page:

...gipfel also can be used to play around with the parameters manually. But be warned: it is pretty difficult to find the right parameters for a given picture manually. You can think of gipfel as a georeferencing software for arbitrary images (not only satellite images or maps).

As a result, Johannes recommends the Web site www.alpin-koordinaten.de as a great place for getting GPS locations, but bear in mind that the site is in German, und mein Deutsch ist nicht so gut, so you may need to run a Web translator. If you’re lucky enough to get a range of reference points appearing on your image, you can start to manipulate where they land on your picture according to perspective, as overwhelming chance dictates that the other mountain peaks won’t line up immediately and, therefore, will require tweaking.

If you look at the controls, such as the compass bearing, focal length, tilt and so on, these will start to move the reference points around while still connecting them as a body of points. Provided you have the right coordinates for your point of view, the reference points should line up, along with information on all the other peaks with it (which is really what the project is for in the first place). gipfel also has an image stitching mode, which allows you to generate panoramic images from multiple images that have been referenced with gipfel. As my attempts with gipfel didn’t turn out so well, I include a shot of Johannes’ stunning results achieved from Lempersberg to Zugspitze in the Bavarian Alps, as well as one of the epic panoramic shots as shown on the Web site. Although this project is still a bit unwieldy, it is still in development, and you have to hand it to gipfel, it is certainly original.
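
To give a rough feel for the geometry behind those controls, here is a minimal Python sketch. It is not gipfel's own code: it assumes a simple pinhole camera, ignores tilt, elevation and lens distortion, and the function names and coordinates are invented purely for illustration. It computes the compass bearing from a viewpoint to a peak, then how far left or right of the image centre that peak would land for a given view direction and focal length.

```python
import math


def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2,
    in degrees clockwise from north (0 <= result < 360)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return math.degrees(math.atan2(y, x)) % 360.0


def horizontal_offset_px(peak_bearing, view_bearing, focal_px):
    """Horizontal pixel offset of a peak from the image centre
    (positive = right of centre) under a pinhole-camera assumption.
    Only meaningful for peaks less than 90 degrees off the view axis."""
    # Wrap the angular difference into the range [-180, 180).
    delta = (peak_bearing - view_bearing + 180.0) % 360.0 - 180.0
    return focal_px * math.tan(math.radians(delta))


# Hypothetical viewpoint and peak, not taken from gipfel's database.
viewpoint = (47.60, 11.00)
peak = (47.42, 10.98)
b = bearing_deg(viewpoint[0], viewpoint[1], peak[0], peak[1])
print("bearing to peak:", round(b, 1), "degrees")
print("offset from centre:", round(horizontal_offset_px(b, 180.0, 1200.0)), "px")
```

Changing the view bearing or the focal length shifts every projected peak at once, which is roughly why the reference points move together as one body when you adjust the controls.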

Source of Information : Linux Journal Issue 181 May 2009

Tuesday, August 25, 2009

NON-LINUX FOSS - ReactOS Remote Desktop

If you’re a Linux fan, there’s a bit of a tendency to think that Linux and open source are two ways of saying the same thing. However, plenty of FOSS projects exist that don’t have anything to do with Linux, and plenty of projects originated on Linux that now are available on other systems. Because a fair share of our readers also use one of those other operating systems, willingly or unwillingly, we thought we’d highlight here in the coming months some of the FOSS projects that fall into the above categories. We probably all know about our BSD brethren: FreeBSD, OpenBSD, NetBSD and so on, but how many of us know about ReactOS? ReactOS is an open-source replacement for Windows XP/2003. Don’t confuse this with something like Wine, which allows you to run Windows programs on Linux. ReactOS is a full-up replacement for Windows XP/2003. Assuming you consider that good news (a FOSS replacement for Windows), the bad news is that it’s still only alpha software. However, the further good news is that it still is under active development; the most recent release at the time of this writing is 0.3.8, dated February 4, 2009. For more information, visit www.reactos.org.

Widelands—Real-Time Strategy

xoops.widelands.org

I covered this game only briefly in the Projects at a Glance section in last month’s issue, so I’m taking a closer look at it this month. Widelands is a real-time strategy (RTS) game built on the SDL libraries and is inspired by The Settlers games from the early and mid-1990s. The Settlers I and II games were made in a time when the RTS genre was still in its relative infancy, so they had different gameplay ideals from their hyperspeed cousins, where a single map could take up to 50 hours of gameplay.

Thankfully, Widelands has retained this ideal, where frantic “tank-rush” tactics do not apply. Widelands takes a much slower pace, with an emphasis not on combat, but on building your home base. And, although the interface is initially hard to penetrate, it does lend itself to more advanced elements of base building, with gameplay mechanics that seem to hinge on not necessarily what is constructed, but how it is constructed. For instance, the ground is often angled. So, when you build roads, you have to take into account where they head in order for builders to be able to transport their goods quickly and easily. Elements such as flow are just about everything in this game— you almost could call it feng shui.

Installation. If you head to the Web site’s Downloads section, there’s an i386 Linux binary available in a tarball that’s around 100MB, which I’ll be running with here. For masochists (or non-Intel machines), the game’s source is available farther down the page.

Download the package and extract it to a new folder (which you’ll need to make yourself). Open a terminal in the new folder, and enter the command:

$ ./widelands

If you’re very lucky, it’ll work right off the bat. Chances are, you’ll get an error like this:

./widelands: error while loading shared libraries: libSDL_ttf-2.0.so.0: cannot open shared object file: No such file or directory

I installed libSDL_ttf-2.0-dev, which fixed that, but then I got several other errors before I could get it to start. I had to install libSDL_gfx.so.4 and libsdl-gfx1.2-4 before it worked, but Widelands relies heavily on SDL (as do many other games), so you might as well install all of the SDL libraries while you’re there.
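
Rather than chasing these errors one at a time, it can help to ask the dynamic linker for the full list up front. The Python sketch below is an illustration only and is not part of Widelands. It assumes the standard ldd tool is installed and relies on ldd marking each unresolved library with "=> not found"; the helper names are made up for this example.

```python
import subprocess


def missing_libraries(ldd_output):
    """Return the names of libraries that ldd reported as '=> not found'."""
    missing = []
    for line in ldd_output.splitlines():
        if "=> not found" in line:
            # Lines look like: "\tlibSDL_ttf-2.0.so.0 => not found"
            missing.append(line.split("=>")[0].strip())
    return missing


def check_binary(path):
    """Run ldd on a binary and list its unresolved shared libraries."""
    result = subprocess.run(["ldd", path], capture_output=True,
                            text=True, check=False)
    return missing_libraries(result.stdout)


# Example (run from the folder where the tarball was extracted):
#     print(check_binary("./widelands"))
```

Each name it returns still has to be matched to a package by hand, as the package names differ between distributions.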

Usage. Once you’re in the game, the first thing you should do is head to the Single Player mode, and choose Campaign to start, as there’s a good tutorial, which you will need. While the levels are loading, hints are given to you for when you get in the game, speeding up the learning process. Controls are with the mouse and keyboard. The mouse is used for choosing various actions on-screen, and the keyboard’s arrow keys let you move the camera around the world. Left-clicking on an insignificant piece of map brings up a menu for all of the basic in-game options. Right-clicking on something usually gets rid of it.

From here on, the game is far too complex to explain in this amount of space, but it’s well worth checking out the documentation and help screens for further information. Once you’ve finished the intro campaign, check out the game’s large collection of single- and multiplayer maps. You get a choice of multiple races, including Barbarians, Empire and Atlanteans, coupled with the ability to play against the computer or against other humans (or a close approximation). It also comes with a background story to the game, and if you spend your Saturday nights playing World of Warcraft instead of going to the pub, I’m sure you’ll find it very interesting.

Delve into this game, and there’s much that lies beneath the surface. It has simple things that please, like how the in-game menus are very sophisticated and solid, with none of the bugginess you get in many amateur games. But, it’s the complete reversal of hyperspeed in its gameplay that I really love. I always want to get back to building my base when playing most RTS games, but I’m constantly drawn away by fire fights. This game lets you keep building, and places serious emphasis on how you do it.

The Web site also has add-ons, such as maps, music and other tribes, along with an editor, artwork and more, so check it out. Ultimately, Widelands is a breath of fresh air in an extremely stale genre, whose roots ironically stem from way back in the past in RTS history. Whether you’re chasing a fix of that original Settlers feel or just want a different direction in RTS, this game is well worth a look.

Source of Information : Linux Journal Issue 181 May 2009

Monday, August 24, 2009

The Future of AppArmor

AppArmor has been adopted as the default Mandatory Access Control solution for both the Ubuntu and Mandriva distributions. I’ve sung its praises before, and as this, my third column about it, shows, I’m clearly still a fan.

But, you should know that AppArmor’s future is uncertain. In late 2007, Novell laid off its full-time AppArmor developers, including project founder Crispin Cowan (who subsequently joined Microsoft).

Thus, Novell’s commitment to AppArmor is open to question. It doesn’t help that the AppArmor Development Roadmap on Novell’s Web site hasn’t been updated since 2006, or that Novell hasn’t released a new version of AppArmor since 2.3 Beta 1 in July 2008, nearly a year ago at the time of this writing.

But, AppArmor’s source code is GPL’d: with any luck, this apparent slack in AppArmor leadership soon will be taken up by some other concerned party—for example, Ubuntu and Mandriva developers. By incorporating AppArmor into their respective distributions, the Ubuntu and Mandriva teams have both committed to at least patching AppArmor against the inevitable bugs that come to light in any major software package.

Given this murky future, is it worth the trouble to use AppArmor? My answer is an emphatic yes, for a very simple reason: AppArmor is so easy to use (it requires no effort for packages that already have distribution-provided profiles and minimal effort to create new profiles) that there’s no reason not to take advantage of it for however long it remains an officially supported part of your SUSE, Ubuntu or Mandriva system.

Source of Information : Linux Journal 185 September 2009

Sunday, August 23, 2009

AppArmor on Ubuntu

In SUSE’s and Ubuntu’s AppArmor implementations, AppArmor comes with an assortment of pretested profiles for popular server and client applications and with simple tools for creating your own AppArmor profiles. On Ubuntu systems, most of the pretested profiles are enabled by default. There’s nothing you need to do to install or enable them. Other Ubuntu AppArmor profiles are installed, but set to run in complain mode, in which AppArmor only logs unexpected application behavior to /var/log/messages rather than both blocking and logging it. You either can leave them that way, if you’re satisfied with just using AppArmor as a watchdog for those applications (in which case, you should keep an eye on /var/log/messages), or you can switch them to enforce mode yourself, although, of course, you should test thoroughly first.

Still other profiles are provided by Ubuntu’s optional apparmor-profiles package. Whereas ideally a given AppArmor profile should be incorporated into its target application’s package, for now at least, apparmor-profiles is sort of a catchall for emerging and not-quite-stable profiles that, for whatever reason, aren’t appropriate to bundle with their corresponding packages. Active AppArmor profiles reside in /etc/apparmor.d. The files at the root of this directory are parsed and loaded at boot time automatically. The apparmor-profiles package installs some of its profiles there, but puts experimental profiles in /usr/share/doc/apparmor-profiles/extras.

The Ubuntu 9.04 packages put corresponding profiles into /etc/apparmor.d. If you install the package apparmor-profiles, you’ll additionally get default protection for the packages shown. The lists in Tables 1 and 2 are perhaps as notable for what they lack as for what they include. Although such high-profile server applications as BIND, MySQL, Samba, NTPD and CUPS are represented, very notably absent are Apache, Postfix, Sendmail, Squid and SSHD. And, what about important client-side network tools like Firefox, Skype, Evolution, Acrobat and Opera? Profiles for those applications and many more are provided by apparmor-profiles in /usr/share/doc/apparmor-profiles/extras, but because they reside there rather than /etc/apparmor.d, they’re effectively disabled. These profiles are disabled either because they haven’t yet been updated to work with the latest version of whatever package they protect or because they don’t yet provide enough protection relative to the Ubuntu AppArmor team’s concerns about their stability. Testing and tweaking such profiles is beyond the scope of this article, but suffice it to say, it involves the logprof command.

Source of Information : Linux Journal 185 September 2009

Saturday, August 22, 2009

AppArmor Review

AppArmor is based on the Linux Security Modules (LSMs), as is SELinux. AppArmor, however, provides only a subset of the controls SELinux provides. Whereas SELinux has methods for Type Enforcement (TE), Role-Based Access Controls (RBACs) and Multi Level Security (MLS), AppArmor provides only a form of Type Enforcement.

Type Enforcement involves confining a given application to a specific set of actions, such as writing to Internet network sockets, reading a specific file and so forth. RBAC involves restricting user activity based on the defined role, and MLS involves limiting access to a given resource based on its data classification (or label).

By focusing on Type Enforcement, AppArmor provides protection against, arguably, the most common Linux attack scenario: the possibility of an attacker exploiting vulnerabilities in a given application that allows the attacker to perform activities not intended by the application’s developer or administrator. By creating a baseline of expected application behavior and blocking all activity that falls outside that baseline, AppArmor (potentially) can mitigate even zero-day (unpatched) software vulnerabilities.

What AppArmor cannot do, however, is prevent abuse of an application’s intended functionality. For example, the Secure Shell dæmon, SSHD, is designed to grant shell access to remote users. If an attacker figures out how to break SSHD’s authentication by, for example, entering just the right sort of gibberish in the user name field of an SSH login session, resulting in SSHD giving the attacker a remote shell as some authorized user, AppArmor may very well allow the attack to proceed, as the attack’s outcome is perfectly consistent with what SSHD would be expected to do after successful login.

If, on the other hand, an attacker figured out how to make the CUPS print services dæmon add a line to /etc/passwd that effectively creates a new user account, AppArmor could prevent that attack from succeeding, because CUPS has no reason to be able to write to the file /etc/passwd.
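
The baseline idea can be illustrated with a toy allow-list in Python. This is only a sketch of the concept: it does not use AppArmor's profile syntax, the rules shown are invented and are not the real CUPS profile, and real enforcement happens in the kernel rather than in a script.

```python
from fnmatch import fnmatch

# Hypothetical baseline: (path pattern, permitted modes) per confined program.
PROFILES = {
    "cupsd": [
        ("/etc/cups/*", "rw"),   # its own configuration: read and write
        ("/etc/passwd", "r"),    # may look up users, but never modify them
    ],
}


def is_allowed(program, path, mode):
    """Return True only if some rule in the program's baseline
    explicitly permits this access; everything else is denied."""
    for pattern, modes in PROFILES.get(program, []):
        if fnmatch(path, pattern) and mode in modes:
            return True
    return False


print(is_allowed("cupsd", "/etc/cups/printers.conf", "w"))
print(is_allowed("cupsd", "/etc/passwd", "w"))
```

The point of the default-deny check is that the write to /etc/passwd is refused without anyone having to anticipate that particular attack: it simply falls outside the baseline.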

Source of Information : Linux Journal 185 September

Friday, August 21, 2009

Why Buy a $350 Thin Client?

On August 10, 2009, I’ll be at a conference in Troy, Michigan, put on by the LTSP (Linux Terminal Server Project, www.ltsp.org) crew and their commercial company (www.disklessworkstations.com). The mini-conference is geared toward people considering thin-client computing for their network. My talk will be targeting education, as that’s where I have the most experience.

One of the issues network administrators need to sort out is whether a decent thin client, which costs around $350, is worth the money when full-blown desktops can be purchased for a similar investment. As with most good questions, there’s no single answer. Thankfully, LTSP is very flexible with the clients it supports, so whatever avenue is chosen, it usually works well. Some of the advantages of actual thin-client devices are:

1. Setup time is almost zero. The thin clients are designed to be unboxed and turned on.

2. Because modern thin clients have no moving parts, they very seldom break down and tend to use much less electricity compared to desktop machines.

3. Top-of-the-line thin clients have sufficient specs to support locally running applications, which takes load off the server without sacrificing ease of installation.

4. They look great.

There are some advantages to using full desktop machines as thin clients too, and it’s possible they will be the better solution for a given install:

1. Older desktops often can be revitalized as thin clients. Although a 500MHz computer is too slow to be a decent workstation, it can make a very viable thin client.

2. Netbooks like the Eee PC can be used as thin clients and then used as notebook computers on the go. It makes for a slightly inconvenient desktop setup, but if mobility is important, it might be ideal for some situations.

3. It’s easy to get older computers for free. Even with the disadvantages that come with using old hardware, it’s hard to beat free.

Thankfully, with the flexibility of LTSP, any combination of thin clients can coexist in the same network. If you’re looking for a great way to manage lots of client computers, the Linux Terminal Server Project might be exactly what you need. I know I couldn’t do my job without it.

Thursday, August 20, 2009

NON-LINUX FOSS - Moonlight

Moonlight is an open-source implementation of Microsoft’s Silverlight. In case you’re not familiar with Silverlight, it’s a Web browser plugin that runs rich Internet applications. It provides features such as animation, audio/video playback and vector graphics.

Moonlight programming is done with any of the languages compatible with the Mono runtime environment. Among many others, these languages include C#, VB.NET and Python. Mono, of course, is a multiplatform implementation of ECMA’s Common Language Infrastructure (CLI), aka the .NET environment. A technical collaboration deal between Novell and Microsoft has provided Moonlight with access to Silverlight test suites and gives Moonlight users access to licensed media codecs for video and audio. Moonlight currently supplies stable support for Silverlight 1.0 and Alpha support for Silverlight 2.0.

Source of Information : Linux Journal 185 September 2009


Wednesday, August 19, 2009

gWaei—Japanese-English Dictionary

gwaei.sourceforge.net

Students of Japanese have had a number of tools available for Linux for some time, but here’s a project that updates the situation and brings several elements together from other projects to form one sleek application. In the words of the gWaei Web site:

gWaei is a Japanese-English dictionary program for the GNOME desktop. It is made to be a modern drop-in replacement for Gjiten with many of the same features. The dictionary files it uses are from Jim Breen’s WWWJDIC Project and are installed separately through the program.

It features the following:

• Easy dictionary installation with a click of a button.

• Support for searching using regular expressions.

• Streams results so the interface is never frozen.

• Click Kanji in the results pane to look at information on it.

• Simple interface that makes sense.

• Intelligent design and Tab switches dictionaries.

• Organizes relevant matches to the top of the results.
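To make the regular-expression search and the "relevant matches first" ordering concrete, here is a minimal Python sketch. It is not gWaei's actual code. It assumes entries in the EDICT layout used by Jim Breen's dictionary files (`KANJI [KANA] /gloss/gloss/`); the sample entries and the `parse` and `search` helpers are hypothetical, and the ranking rule (whole-gloss matches before partial ones) is only one plausible interpretation of "relevant".

```python
import re

# Hypothetical sample entries in the EDICT layout: KANJI [KANA] /gloss/gloss/
SAMPLE = [
    "山猫 [やまねこ] /wildcat/lynx/",
    "猫 [ねこ] /cat/",
    "犬 [いぬ] /dog/",
]

def parse(line):
    """Split an entry into its headword part and a list of English glosses."""
    head, _, rest = line.partition(" /")
    glosses = [g for g in rest.split("/") if g]
    return head, glosses

def search(pattern, lines):
    """Return matching entries, whole-gloss matches before partial matches."""
    rx = re.compile(pattern, re.IGNORECASE)
    exact, partial = [], []
    for line in lines:
        _, glosses = parse(line)
        if any(rx.fullmatch(g) for g in glosses):
            exact.append(line)        # the pattern matches an entire gloss
        elif any(rx.search(g) for g in glosses):
            partial.append(line)      # the pattern matches only part of a gloss
    return exact + partial

for entry in search("cat", SAMPLE):
    print(entry)
```

Searching for `cat` lists the entry glossed exactly as "cat" ahead of the one that only contains it ("wildcat"), even though the latter comes first in the file.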

Installation

If you head to the Web site’s download section, there are gWaei packages available in deb, RPM and source tarball format. For me, the deb installed with no problems, so I ran with that. When running with the source version, I couldn’t find all of the dependencies, but the Web site says you need the following packages, along with their -dev counterparts: gtk+-2.0, gconf-2.0, libcurl, libgnome-2.0 and libsexy. The documentation also says that compiling the source is the standard fare of:

$ ./configure

$ make

$ sudo make install

After installation, I found gWaei in my menu under Applications -> Utilities -> gWaei Japanese-English Dictionary. If you can’t find gWaei in your menu, enter the command:

$ gwaei

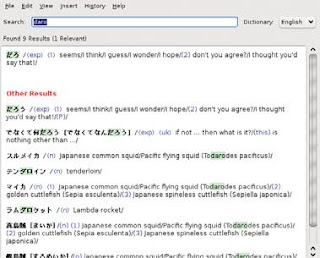

Usage

Once gWaei starts, the first thing you see is a Settings window that’s broken into three tabs: Status, Install Dictionaries and Advanced. Status tells you how things are currently set up, and to start off with, all you’ll see is Disabled. Click the Install Dictionaries tab, and you’ll see that there are buttons already set up to install new dictionaries, called Add, for English, Kanji, Names and Radicals. Once these are all installed, each of them will be changed to Enabled back in the Status tab. After these are installed, click Close, and you are in the program. The first place you should go is the search bar.

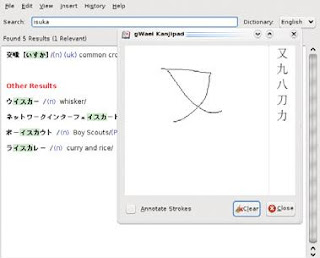

Enter something in English or in Romaji (Japanese with the Latin alphabet we use), and meanings and translations appear in the large field below with a probable mix of kanji and kana, and an English translation. You also can enter searches in kana and kanji, but my brother has my Japanese keyboard, so I couldn’t really try it out. For a really cool feature, click Insert -> Using Kanjipad, and a blank page comes up where you can draw kanji characters by hand with your mouse. Various kanji characters then appear on the right and update, depending on how many strokes you make and their shape. If you click Insert -> Using Radical Search Tool, you can search for radicals on basic kanji characters, which also can be restricted by the number of strokes. All in all, gWaei is a great program with elegant simplicity, and it has the features you need, whether you’re in Japan or the West (or anywhere else that’s not Japan for that matter). If you’re a Japanese student, this should be standard issue in your arsenal.

The coolest feature in gWaei is this kanji pad, where you can draw kanji with your mouse, and the computer dynamically alters the selection based on your strokes.

Source of Information : CPU Magazine 07 2009

Tuesday, August 18, 2009

NON-LINUX FOSS

In our second Upfront installment highlighting non-Linux FOSS projects, we present SharpDevelop. SharpDevelop (aka #Develop) is an IDE for developing .NET applications in C#, F#, VB.NET, Boo and IronPython. SharpDevelop includes all the stuff you’d expect in a modern IDE: syntax highlighting, refactoring, forms designer, debugger, unit testing, code coverage, Subversion support and so on. It runs on all modern versions of the Windows platform. SharpDevelop is a “real” FOSS project; it’s not controlled by any big sinister corporation (and we all know who I’m talking about). It has an active community and is actively upgraded. At the time of this writing, version 3.0 just recently has been released. Even if you use only Linux, you may be indirectly using SharpDevelop. If you use any Mono programs, they probably were developed using the MonoDevelop IDE. MonoDevelop was forked from SharpDevelop in 2003 and ported to GTK.

Source of Information : Linux Journal Issue 182 June 2009

SharpDevelop Running on Vista (from www.icsharpcode.net)


Monday, August 17, 2009

WHAT’S NEW IN KERNEL DEVELOPMENT

An effort to change the license on a piece of code hit a wall recently. Mathieu Desnoyers wanted to migrate from the GPL to the LGPL on some userspace RCU code. Read-Copy Update is a way for the kernel to define the elements of a data object, without other running code seeing the object in the process of formation. Mathieu’s userspace version provides the same service for user programs. Unfortunately, even aside from the usual issue of needing permission from all contributors to change the license of their contribution, it turns out that IBM owns the patent to some of the RCU code concepts, and it has licensed the patent for use only in GPLed software. So, without permission from IBM, Mathieu can get permission from all the contributors he wants and still be stuck with the GPL.
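The read-copy-update idea described above can be illustrated with a small Python sketch. This is neither the kernel's RCU nor Mathieu's userspace library. The `RcuCell` class and its methods are hypothetical names, and the sketch assumes that rebinding a single attribute is seen by readers as one step (as it is in CPython). Real RCU also relies on memory barriers and on grace periods before old copies are freed; here the garbage collector stands in for that.

```python
import threading

class RcuCell:
    """Hypothetical holder for a published snapshot; readers never take a lock."""

    def __init__(self, initial):
        self._current = initial                 # the published snapshot
        self._writer_lock = threading.Lock()    # serializes writers only

    def read(self):
        # Readers simply pick up whichever snapshot is currently published.
        return self._current

    def update(self, mutate):
        with self._writer_lock:
            draft = dict(self._current)   # copy the published snapshot
            mutate(draft)                 # modify the private copy out of sight
            self._current = draft         # publish by rebinding one reference

cell = RcuCell({"a": 1})
before = cell.read()
cell.update(lambda d: d.update(b=2))
print(before)       # the old snapshot is untouched
print(cell.read())  # new readers see the fully formed update
```

A reader that picked up the old snapshot keeps a consistent view, and new readers see the finished object. No reader ever observes the object while it is half built.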

Loadlin is back in active development! The venerable tool boots Linux from a directory tree in a DOS partition, so all of us DOS users can experiment with this new-fangled Linux thing. To help us with that, Samuel Thibault has released Loadlin version 1.6d and has taken over from Hans Lermen as official maintainer of the code. The new version works with the latest Linux kernels and can load up to a 200MB bzImage. He’s also migrated development into a Mercurial repository. (Although not as popular as git with kernel developers, Mercurial does seem to have a loyal following, and there’s even a book available at hgbook.red-bean.com.) After seven years of sleep, here’s hoping Loadlin has a glorious new youth, with lots of new features and fun. It loads Linux from DOS! How cool is that?

Hirofumi Ogawa has written a driver for Microsoft’s exFAT filesystem, for use with large removable Flash drives. The driver is read-only, based on reverse-engineering the filesystem on disk. There don’t seem to be any immediate plans to add write support, but that could change in a twinkling, if a developer with one of those drives takes an interest in the project. Hirofumi has said he may not have time to continue work on the driver himself.

Meanwhile, Boaz Harrosh has updated the exofs filesystem. Exofs supports Object Storage Devices (OSDs), a type of drive that implements normal block device semantics, while at the same time providing access to data in the form of objects defined within other objects. This higher-level view of data makes it easier to implement fine-grained data management and security. Boaz’s updates include some ext2 fixes that still apply to the exofs codebase, as exofs originally was an ext2 fork. He also abandoned the IBM API in favor of supporting the open-osd API instead.

Adrian McMenamin has posted a driver for the VMUFAT filesystem, the SEGA Dreamcast filesystem running on the Dreamcast visual memory unit. Using his driver, he was able to manage data directly on the Dreamcast. At the moment, the driver code does seem to have some bugs, and other problems were pointed out by various people. Adrian has been inspired to do a more intense rewrite of the code, which he intends to submit a bit later than he’d first anticipated.

A new source of controversy has emerged in Linux kernel development. With the advent of pocket devices that are intended to power down when not in use, or at least go into some kind of power-saving state, the whole idea of suspending to disk and suspending to RAM has become more complicated. It’s not obvious whether the kernel or userspace should be concerned with analyzing the sleep-worthiness of the various parts of the system, or how much the responsibility should be shared between them. There seem to be many opinions, all of which rest on everyone’s idea of what is appropriate as well as on what is feasible. The kernel is supposed to control all hardware, but the X Window System controls hardware and is not part of the kernel. So, clearly, exceptions exist to any general principles that might be involved. Ultimately, if no obvious delineation of responsibility emerges, it’s possible folks may start working on competing ideas, like what happened initially with software suspend itself.

Source of Information : Linux Journal Issue 182 June 2009


Sunday, August 16, 2009

Content Management Systems

Apart from the ISO images of four FOSS distributions in this month’s DVD, we have also managed to pack in some of the best content management systems (CMS). We hope you deploy and test them all. Well, if you really do, let us know your feedback on them, or write a comparison article if you have the time :-)

Drupal is a FOSS modular framework and CMS written in PHP. It is used as a back-end system for many different types of websites, ranging from small personal blogs to large corporate and political sites. The standard release of Drupal, known as “Drupal core”, contains basic features common to most CMSs. These include the ability to register and maintain individual user accounts, administration menus, RSS-feeds, customizable layout, flexible account privileges, logging, a blogging system, an Internet forum, and options to create a classic brochure-ware website or an interactive community website.